Case Study: Enabling Preliminary 24/7 Health Support with Google Assistant

A. Let's Start with The Why?

To begin with, this conversational chatbot is one of the side projects I developed during the COVID-19 lockdown. As in my other posts, let's start with the question: why does this project matter?

Well, the pandemic certainly transformed the way we interact by shifting a large part of our daily routines online. This chatbot was built to address the increased demand for digital tools that facilitate remote interactions, specifically by providing 24/7 preliminary health advice. Now, the next question is: how did I do it?

There are various tools for designing and developing a conversational assistant, commonly known as a chatbot. For this project, I am using Google Actions. What's that?

Google Actions (officially Actions on Google) is a platform for building voice- and text-based conversational interfaces. It lets users design, experiment with, test, and publish chatbots that integrate directly with Google Assistant, which is accessible to an estimated 3.6 billion users on a wide variety of devices, from phones and tablets to smartwatches and more. This reach fits what Google calls the Next Billion Users, an effort that emphasizes making products accessible and easy to adopt for everyone, including designers and developers. Pretty interesting, right?

However, why build a healthcare assistant? Isn't Googling enough? That's a fair question, but there are a few specific reasons why an intelligent assistant (built on top of Google Assistant) is helpful in this case:

1. Support users with limited mobility → When we are sick, we are advised to limit physical and mental exertion so that we recover faster. In other words, I would have to stay on bed rest most of the time while enduring periodic pain such as headaches, stomachaches, rising body temperature and, in some cases, vomiting. Having to manually search and type on my phone isn't ideal in such circumstances. This is where a voice health assistant plays its role, providing useful health advice and suggestions, especially at night when everyone else is asleep

2. Accessible for people with special needs → Some readers may wonder: what about people with visual or hearing impairments? How can a healthcare assistant support them? Good question. As mentioned before, the reason I specifically use Google Actions for this project is its seamless integration with Google Assistant, which is also supported by Google's accessibility tools such as Live Transcribe. Therefore, the assistant I developed lives in an inclusive end-to-end ecosystem that also supports people with special needs, rather than being a standalone application

3. Access Anytime → There are no limits such as opening hours or the need to make an appointment. Users can ask the assistant for advice directly, at any time. This can be especially useful for individuals who are experiencing minor symptoms or seeking general health information outside of traditional office hours

B. Let's Continue with The How?

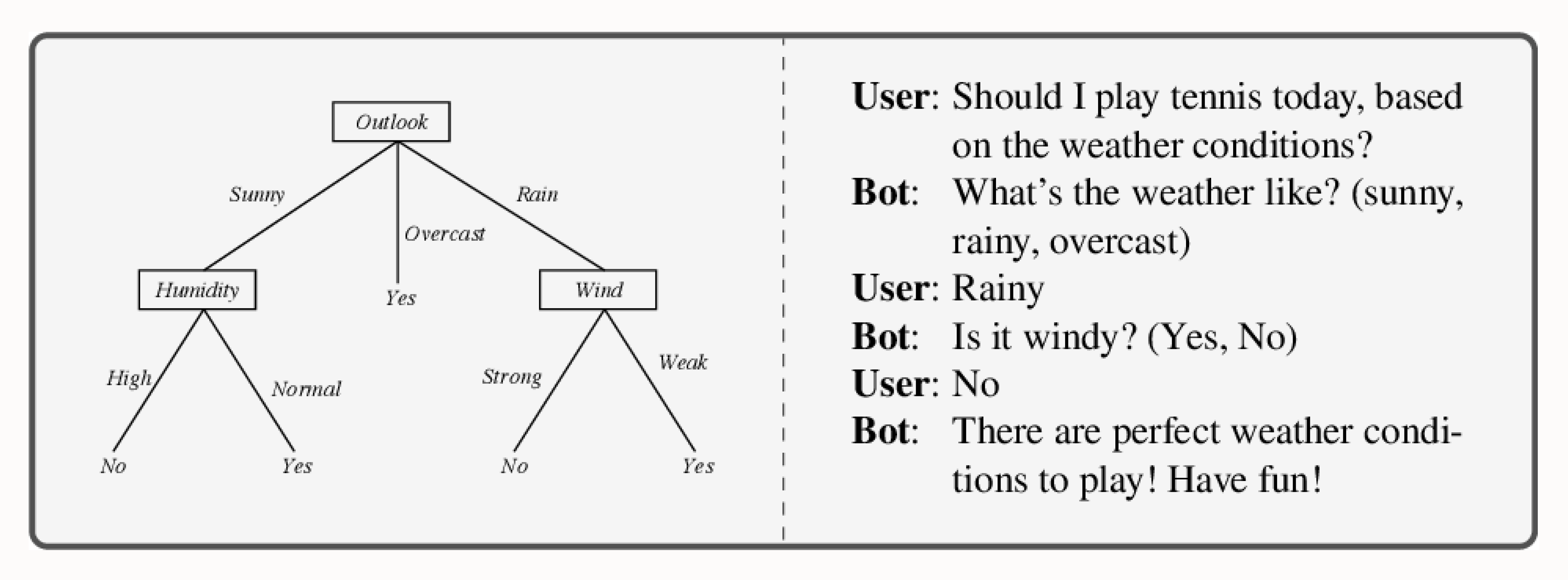

In general, intelligent assistant or chatbot development commonly relies on Decision Tree logic as a conversational design best practice. In programming, this logic is typically expressed with If-Else statements:

As seen in the example image above, If-Else statements are commonly used because they make it simple to evaluate a wide variety of user inputs and responses as part of the conversation flow
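To make this concrete, here is a minimal Python sketch of a tiny symptom decision tree written as nested If-Else statements. The `triage` function, the symptoms, and the branch labels are hypothetical placeholders for illustration, not real medical logic and not how Google Actions represents flows internally.

```python
def triage(has_fever: bool, has_cough: bool, has_rash: bool) -> str:
    """Walk a tiny, hypothetical symptom decision tree with nested if-else branches."""
    if has_fever:
        if has_cough:
            return "Fever with cough: go to the 'Flu' branch"
        if has_rash:
            return "Fever with rash: go to the 'Rash' branch"
        return "Fever only: go to the 'Mild Fever' branch"
    if has_cough:
        return "Cough without fever: go to the 'Cough' branch"
    # No branch matched, so the bot needs to ask more questions.
    return "No match: ask a follow-up question"


print(triage(has_fever=True, has_cough=False, has_rash=False))
```

Each answer narrows the path, which is what makes this style easy to reason about; the trade-off is that the tree grows quickly as more symptoms are added.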

Therefore, the first logical step is to collect and map examples of user inputs and bot responses that will serve as the bot's learning references. To achieve this, I needed a reliable data source relevant to my goal: to build a reliable 24/7 healthcare assistant that provides helpful preliminary advice at home
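As a rough illustration of what "mapping user inputs" means, the sketch below pairs each hypothetical intent with a few example phrases and matches new input by counting shared words. This word-overlap matcher is a deliberately simple stand-in: Google Actions uses its own natural language understanding, and the intent names and phrases here are assumptions.

```python
import re
from typing import Optional

# Hypothetical intents, each with a few example user inputs (training phrases).
TRAINING_PHRASES: dict[str, list[str]] = {
    "yes": ["yes", "yeah", "yep", "that's right"],
    "no": ["no", "nope", "not really"],
    "report_fever": ["i have a fever", "my temperature is high", "i feel hot"],
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z']+", text.lower()))


def match_intent(user_input: str) -> Optional[str]:
    """Return the intent whose example phrase shares the most words with the input."""
    words = tokenize(user_input)
    best_intent: Optional[str] = None
    best_score = 0
    for intent, phrases in TRAINING_PHRASES.items():
        for phrase in phrases:
            score = len(words & tokenize(phrase))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent  # None when no phrase shares any word with the input


print(match_intent("I think I have a fever"))
```

The more varied the example phrases collected for each intent, the more user wordings the bot can recognize, which is why a good data source matters so much at this stage.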

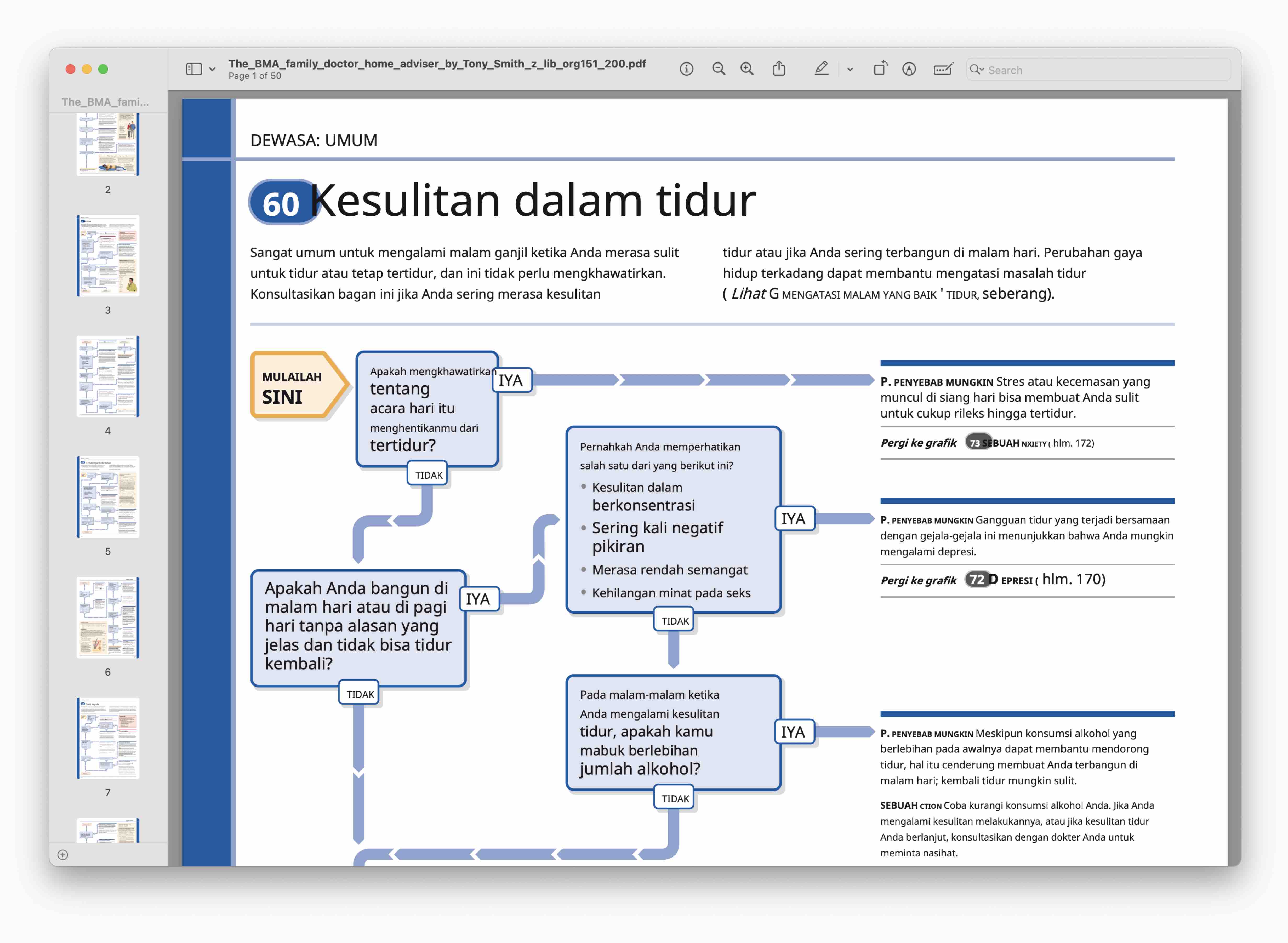

For that, I use Dr. Tony Smith's book, Family Doctor Home Adviser, as the assistant's primary learning reference and data source. An example page from the book is shown in the image above.

There are three main reasons why I am using Dr. Tony Smith's book as the data source for this project:

1. First and foremost, the book provides easy-to-understand explanations of common medical conditions and illnesses, as well as practical advice for managing symptoms and staying healthy.

2. Dr. Tony Smith's medical advice is clearly written and visualized as Decision Tree logic (shown in point 2 in the image above), which makes it much easier for me to translate the flow into the health assistant

3. The book is written by a reliable expert: Dr. Tony Smith himself, who also wrote for the British Medical Association and held a deputy role at the British Medical Journal (BMJ). He also wrote other home medical advice books, such as Family Health Guide (1972), The Medical Risks of Life (1977), Family Doctor (1986), Practical Family Health (1990), Drugs and Medication (1991), The Complete Family Health Encyclopedia (1995), Guide to Healthy Living (1993) with Dr Stephen Carroll, and Adolescence, The Survival Guide for Parents & Teenagers (1994) with Elizabeth Fenwick. What a man!

Now that we have a reliable data source, the next step is to map the user input and response information from the book into the health assistant. In other words, it's development time!

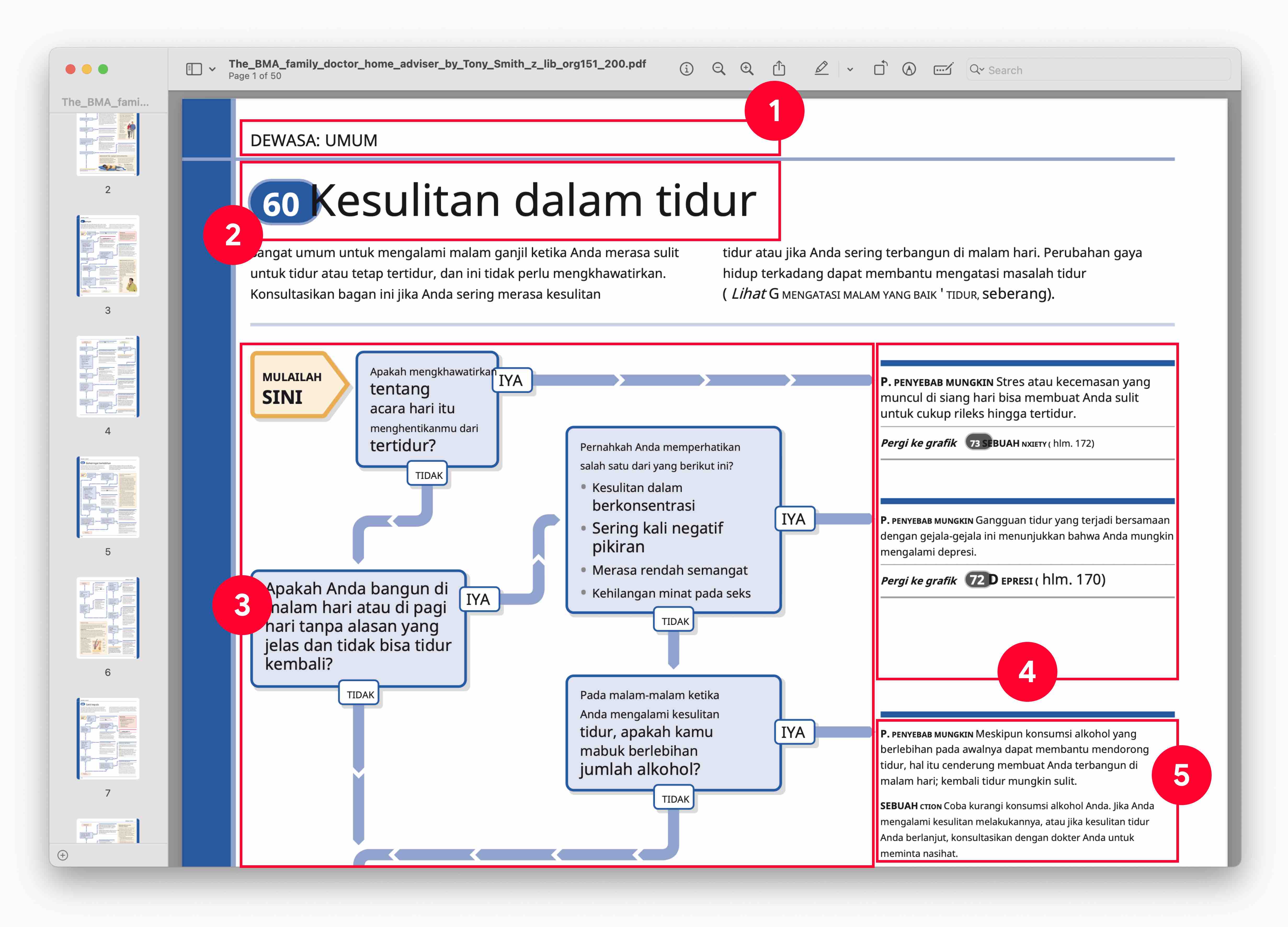

To begin the mapping process, I sort the key information from Dr. Tony Smith's book into several components (shown in the image above) that can be added to Google Actions:

1. Age Group → This is important information when defining symptoms, because different age groups may experience and respond to illnesses differently. For example, young children and elderly individuals may have weaker immune systems and be more susceptible to certain illnesses. Additionally, certain symptoms may be more prevalent in specific age groups

2. Disease Name → This information helps the assistant provide relevant results based on the user's inputs and responses when interacting with the healthcare assistant

3. Intents and Prompts → Intents identify the user's intention or reason for engaging with the assistant, which helps the assistant respond appropriately and accurately to the user's needs. Prompts, on the other hand, guide the user through the conversation with helpful questions or suggestions to ensure that the user gets the information they need. For instance, if a user is unsure about the symptoms they are experiencing, the assistant can prompt the user to describe their symptoms in detail, ask follow-up questions, and provide relevant information about potential diagnoses or treatment options.

4. Continued symptomatic results → In the real world, different diseases may share similar symptoms. This component helps the assistant identify other possible diseases based on symptom similarities and provide relevant suggestions to users. For example, if a user says no to all of the 'Flu' prompts, the assistant can move on to Mild Fever and other related diseases to understand the user's condition better. That's why Dr. Tony Smith writes "continue to chapter X", pointing to other related diseases the user may be experiencing

5. Final results → This is where the assistant provides practical advice to users once their inputs and responses match a certain disease type
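The five components above can be sketched as a small data model, assuming each condition is a chart of yes/no questions. The class names, fields, chart contents, and the `run_chart` walker are all hypothetical; this is not how Google Actions stores data, only a way to show how Age Group, Disease Name, prompts, continuation links, and final results relate to each other.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Question:
    """One yes/no prompt in a chart (Intents and Prompts)."""
    prompt: str
    advice_if_yes: str  # Final result given when the user answers "yes"


@dataclass
class DiseaseChart:
    """The components sorted out of the book for a single condition."""
    name: str                          # Disease Name
    age_group: str                     # Age Group
    questions: list[Question]          # Intents and Prompts
    continue_to: Optional[str] = None  # Continued symptomatic results ("continue to chapter X")


# Hypothetical charts with placeholder text, not content from the book.
CHARTS: dict[str, DiseaseChart] = {
    "Flu": DiseaseChart(
        name="Flu",
        age_group="adult",
        questions=[Question("Do your muscles ache all over?", "[Flu advice placeholder]")],
        continue_to="Mild Fever",
    ),
    "Mild Fever": DiseaseChart(
        name="Mild Fever",
        age_group="adult",
        questions=[Question("Is your temperature only slightly raised?", "[Mild Fever advice placeholder]")],
    ),
}


def run_chart(charts: dict[str, DiseaseChart], start: str, answers: dict[str, bool]) -> str:
    """Ask each chart's questions in order and return the first matching advice.

    If every answer in a chart is "no", follow its `continue_to` link to the
    related chart. The `visited` set stops the walk if two charts point at each other.
    """
    current: Optional[str] = start
    visited: set[str] = set()
    while current is not None and current not in visited:
        visited.add(current)
        chart = charts[current]
        for question in chart.questions:
            if answers.get(question.prompt, False):  # an unanswered question counts as "no"
                return question.advice_if_yes
        current = chart.continue_to
    return "No matching chart: suggest consulting a doctor"


# The user says "no" to the Flu question, so the walk continues to Mild Fever.
answers = {
    "Do your muscles ache all over?": False,
    "Is your temperature only slightly raised?": True,
}
print(run_chart(CHARTS, "Flu", answers))
```

In this sketch `age_group` is only stored; a fuller version could use it to decide which chart to start from.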

Phew! Now that I've collected and identified all the information components, the next step is translating that information into Google Actions. Let's begin!

C. Let's see the result!

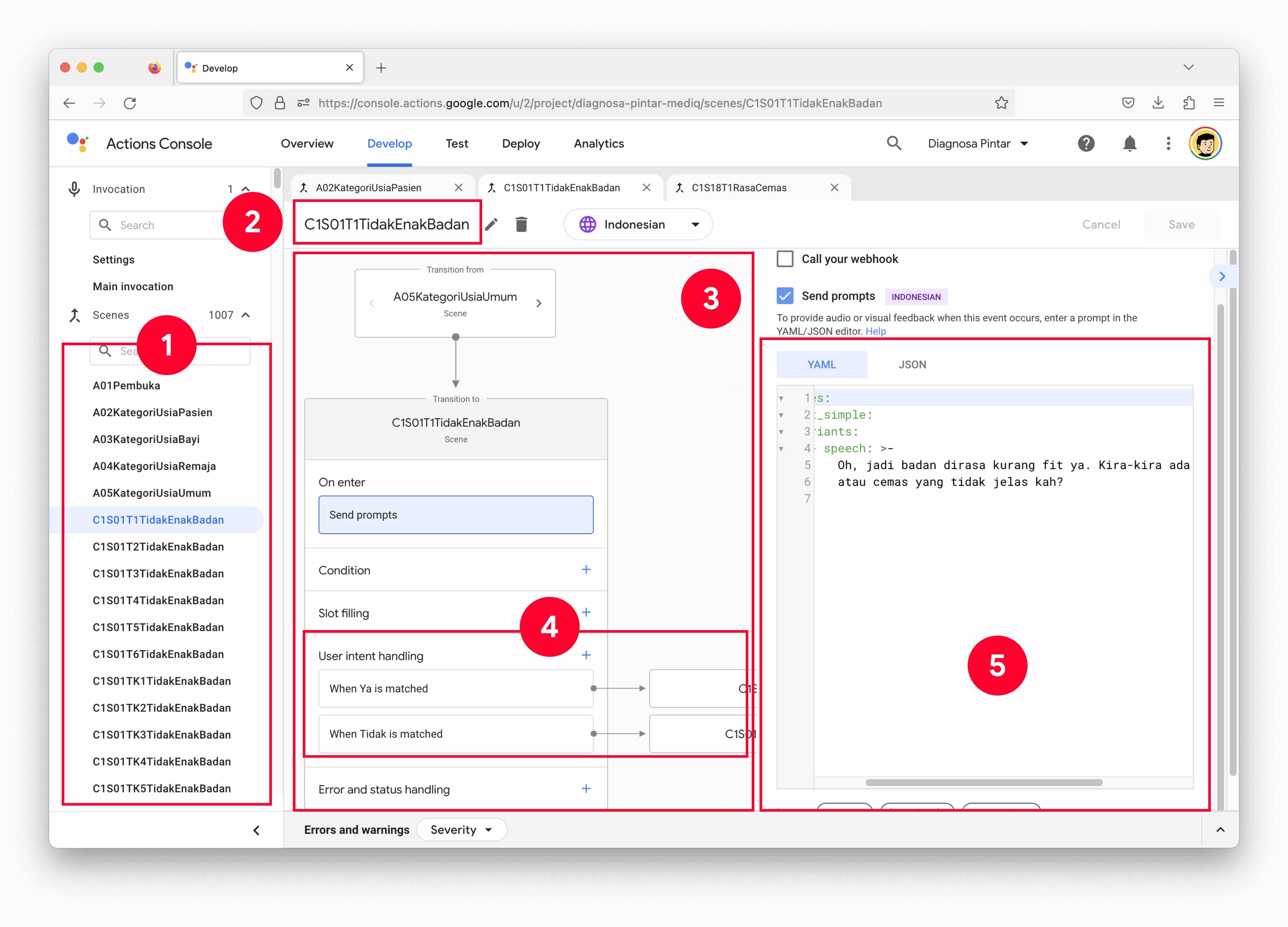

Voilà, the image above gives an overview of how I translated those components into Google Actions:

1. List of Scenes → A scene represents a specific state or stage of the conversation that the user is in while talking with the Assistant. Loosely speaking, it plays an organizing role similar to a Frame in Figma or a Layer in Photoshop. In this project, scenes are used to group intents and prompts by disease type, which also ties together points 2 to 4

2. Disease Name → This corresponds to the disease name information from the book, which helps provide relevant results based on the user's inputs and responses when interacting with the healthcare assistant

3. Intents and Prompts → This corresponds to the intents and prompts identified while sorting the information components from the book

4. User Intent Handling → This corresponds to the Continued Symptomatic Results component from the book, since it decides where the conversation goes next based on the user's answer

5. Custom Prompt Editor → This is where, as a designer, I can make the bot interact in a more human way by adding a bit of artificial emotion through casual Bahasa Indonesia expressions, for example "oh gitu ya" (roughly "oh, I see") and "wah, hmm, kira-kira" (roughly "wow, hmm, let's see"), which mimic how people talk in real life
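The scene setup above can be approximated as a small state machine. In this sketch, which is an assumption rather than the Google Actions runtime, each hypothetical `Scene` holds a prompt plus a mapping from matched intent to the next scene (the User Intent Handling part), and `respond` prefixes replies with one of the casual fillers mentioned above (the Custom Prompt Editor part). Scene names and prompt text are placeholders.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Scene:
    """Hypothetical stand-in for a scene: what the bot says, plus its intent handling."""
    prompt: str
    transitions: dict[str, str] = field(default_factory=dict)  # matched intent -> next scene


# Casual Bahasa Indonesia fillers like those added in the Custom Prompt Editor.
FILLERS = ["Oh gitu ya.", "Wah, hmm, kira-kira..."]

SCENES: dict[str, Scene] = {
    "Flu": Scene("Do your muscles ache all over?", {"yes": "FluAdvice", "no": "MildFever"}),
    "MildFever": Scene(
        "Is your temperature only slightly raised?",
        {"yes": "MildFeverAdvice", "no": "SeeDoctor"},
    ),
    "FluAdvice": Scene("[Flu advice placeholder]"),
    "MildFeverAdvice": Scene("[Mild Fever advice placeholder]"),
    "SeeDoctor": Scene("[Suggest contacting a doctor placeholder]"),
}


def respond(current: str, intent: str, rng: random.Random) -> tuple[str, str]:
    """Apply the current scene's intent handling and build the bot's reply."""
    scene = SCENES[current]
    next_name = scene.transitions.get(intent)
    if next_name is None:
        # No handler for this intent: stay in the same scene and repeat its prompt.
        return current, f"Sorry, could you say that again? {scene.prompt}"
    # Prefix the next scene's prompt with a random filler so replies sound less robotic.
    filler = rng.choice(FILLERS)
    return next_name, f"{filler} {SCENES[next_name].prompt}"


rng = random.Random(42)
print(respond("Flu", "no", rng))
```

Passing a seeded `random.Random` keeps the filler choice reproducible in tests, while a live conversation could use an unseeded one.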

Next, let's take a look at a couple of demo previews that I ran on Google Home and mobile devices!

D. Learnings

I personally believe that if we could digitize the entire content of Dr. Tony Smith's book, this conversational bot could make a lasting impact in healthcare, especially by providing better access to quality health information for the masses.

However, since Google is sunsetting its custom conversational bot capabilities (Conversational Actions) on June 13th, 2023, it would be a good idea to migrate and replicate this project in other tools such as Dialogflow.

Nonetheless, this project truly shows that designing a conversational assistant is more than just visual crafting. It's the art of making data speak to users. Thank you for reading! ❤️